From Theory to Reality: Advancements in Quantum Computing

Introduction

Quantum computing represents a paradigm shift in computational technology, promising to revolutionize fields ranging from cryptography and materials science to artificial intelligence and financial modeling. Unlike classical computers, which process information as bits that are each either 0 or 1, quantum computers use the principles of quantum mechanics to manipulate qubits, quantum bits that can exist in a superposition of 0 and 1. For certain problems, such as factoring large integers, this allows quantum algorithms to run far faster than the best known classical algorithms.

The roots of quantum computing trace back to the early 20th century, when physicists like Max Planck and Albert Einstein laid the foundation for quantum theory. Over time, these theoretical insights have been refined and expanded, culminating in practical applications that are now poised to transform our understanding of computation.

Theoretical Foundations

At the heart of quantum computing are several key concepts derived from quantum mechanics:

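- Superposition: A qubit can exist in a weighted combination of 0 and 1 at the same time, and a register of n qubits can hold a superposition of all 2^n bit strings. A measurement returns only one of those bit strings, so a quantum algorithm must be designed to make the useful answer likely rather than simply processing all inputs in parallel.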

- Entanglement: Qubits can become entangled, meaning their joint state cannot be described one qubit at a time, and measurement outcomes on one qubit are correlated with outcomes on the other, no matter the distance between them. This property is a key resource for quantum algorithms.

- Quantum Interference: Quantum algorithms arrange for the amplitudes of qubit states to interfere, reinforcing the amplitudes of desired outcomes and cancelling those of unwanted ones.

These principles are underpinned by mathematical frameworks such as linear algebra and probability theory, which provide the tools needed to design and analyze quantum algorithms.
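The three principles above can be illustrated with a few lines of linear algebra. The following is a minimal sketch in plain Python, not a real quantum SDK: states are lists of amplitudes, and the helper names (`hadamard`, `cnot`, `tensor`, `probabilities`) are chosen here for illustration only.

```python
import math

# A single-qubit state is a list of two amplitudes: [amp_0, amp_1].
ZERO = [1.0, 0.0]

def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]

def probabilities(state):
    """Born rule: each outcome's probability is |amplitude|^2."""
    return [abs(amp) ** 2 for amp in state]

def tensor(q0, q1):
    """Combine two single-qubit states; order is |00>, |01>, |10>, |11>."""
    return [x * y for x in q0 for y in q1]

def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

# Superposition: H|0> has equal amplitude on |0> and |1>.
plus = hadamard(ZERO)
superposition_probs = probabilities(plus)            # close to [0.5, 0.5]

# Interference: a second Hadamard cancels the |1> amplitude entirely.
interference_probs = probabilities(hadamard(plus))   # close to [1.0, 0.0]

# Entanglement: H on the first qubit followed by CNOT gives a Bell state,
# where only the outcomes 00 and 11 can ever be observed.
bell_probs = probabilities(cnot(tensor(plus, ZERO)))  # close to [0.5, 0, 0, 0.5]
```

In the Bell state, neither qubit has a definite value on its own, yet the two measurement results always agree. Simulating n qubits this way needs 2^n amplitudes, which is why classical simulation becomes impractical as n grows.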

Early Developments

The journey from theory to practical implementation began in earnest in the late 20th century. In 1981, physicist Richard Feynman proposed the idea of a quantum computer capable of simulating quantum systems more efficiently than classical machines. This was followed by David Deutsch’s formulation of the universal quantum Turing machine in 1985, laying the groundwork for quantum algorithms.

Pioneers like Peter Shor and Lov Grover made significant strides in the 1990s. Shor’s algorithm (1994) showed that a quantum computer could factor large integers in polynomial time, whereas the best known classical methods take super-polynomial time, posing a threat to widely used cryptographic systems such as RSA. Grover’s algorithm (1996), meanwhile, showed how a quantum computer could search an unsorted database of N items in roughly √N steps, a quadratic rather than exponential speedup.
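To make Grover’s speedup concrete, here is a small classical simulation of the algorithm on a plain list of amplitudes. It is a sketch under simplifying assumptions: exactly one marked item, no noise, and a state vector stored explicitly (which a real quantum computer would not do). The function name `grover_search` and the values of `N` and `MARKED` are illustrative.

```python
import math

def grover_search(n_items, marked, iterations):
    """Simulate Grover's algorithm; return the measurement probabilities."""
    # Start in the uniform superposition over all items.
    amps = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return [a * a for a in amps]

N = 64        # size of the unsorted search space (illustrative)
MARKED = 42   # index of the item being searched for (illustrative)

# About (pi / 4) * sqrt(N) iterations maximise the success probability.
optimal = math.floor(math.pi / 4 * math.sqrt(N))
probs = grover_search(N, MARKED, optimal)
success = probs[MARKED]
```

For 64 items this takes 6 iterations and finds the marked item with probability of about 0.997, whereas a classical search needs 32 lookups on average. Running more iterations than the optimum lowers the success probability again, because the amplitudes keep rotating past the target.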

Recent Advancements

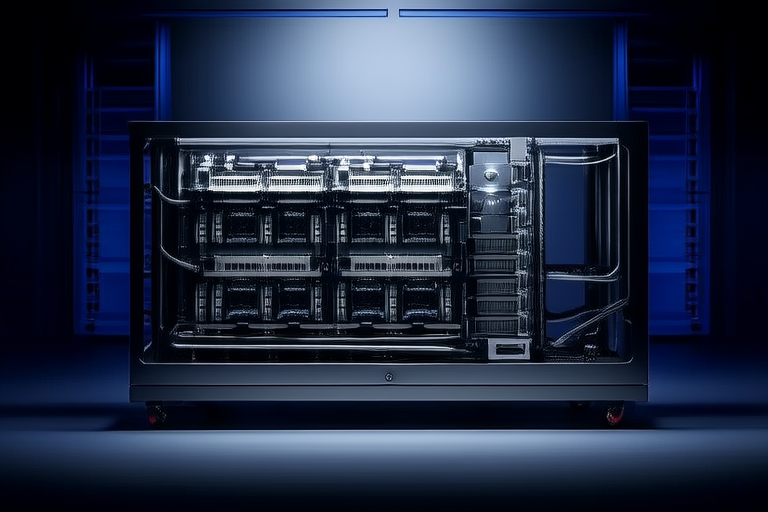

In recent years, substantial progress has been made in both hardware and software. Companies like IBM, Google, and Rigetti Computing have developed increasingly sophisticated quantum processors. In 2019, Google reported achieving “quantum supremacy” when its 53-qubit Sycamore processor completed a random circuit sampling task in about 200 seconds; Google estimated that the same task would take a leading classical supercomputer about 10,000 years, an estimate that IBM disputed.

Research institutions such as MIT, Caltech, and the University of Toronto continue to push the boundaries of quantum technology. Innovations include new materials for qubit construction, error-correction techniques, and hybrid quantum-classical systems that combine the strengths of both paradigms.

Applications and Use Cases

Quantum computing holds immense promise across various industries:

- Cryptography: Quantum computers could break many public-key encryption schemes currently in use, such as RSA and elliptic-curve cryptography, necessitating the development of quantum-resistant algorithms.

- Drug Discovery: Simulating molecular interactions at the quantum level could accelerate the discovery of new drugs and materials.

- Optimization Problems: Solving complex optimization tasks, such as supply chain management or financial portfolio optimization, could lead to significant efficiency gains.

For example, pharmaceutical companies are exploring how quantum simulations can predict the behavior of molecules more accurately, potentially reducing the time and cost required to develop new medicines.

Challenges and Future Prospects

Despite impressive advancements, several challenges remain:

- Error Rates: Qubits lose coherence quickly and gate operations are imperfect, so keeping error rates low enough for long computations remains a major hurdle.

- Scalability: Building large-scale, fault-tolerant quantum computers requires overcoming significant engineering obstacles.

- Integration: Seamlessly integrating quantum and classical systems remains an open question.
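A rough calculation shows why error rates and scalability are so closely linked. The sketch below assumes, as a simplification, that every gate fails independently with the same probability and that a single failure ruins the result; the 0.1% error rate and the function name `error_free_probability` are illustrative, not measurements of any particular device.

```python
def error_free_probability(gate_error_rate, gate_count):
    """Chance that every gate in a circuit succeeds, assuming independent errors."""
    return (1 - gate_error_rate) ** gate_count

# Assumed per-gate error rate of 0.1% (illustrative only).
for gates in (100, 1_000, 10_000):
    p = error_free_probability(0.001, gates)
    print(f"{gates:>6} gates: {p:.4%} chance of an error-free run")
```

Under these assumptions, a 100-gate circuit runs cleanly about 90% of the time, a 1,000-gate circuit about 37% of the time, and a 10,000-gate circuit almost never. This is why error correction, and not just more qubits, is needed for large-scale computation.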

Looking ahead, experts anticipate that within the next decade, we may see practical applications of quantum computing in industries ranging from finance to logistics. Continued investment in research and development will be crucial to realizing this vision.

Conclusion

The transition from theoretical concept to practical reality in quantum computing has been marked by significant milestones and innovations. From its origins in quantum theory to the cutting-edge technologies being developed today, quantum computing stands on the brink of transforming numerous sectors. While challenges persist, the potential benefits are too great to ignore. By fostering collaboration between academia, industry, and government, we can accelerate progress and unlock the full potential of this revolutionary technology.